Dataset Description

PersonPath22 is a multi-person tracking dataset that is over an order of magnitude larger than the most popular tracking datasets, MOT17 and MOT20, while maintaining the high annotation quality of those datasets.

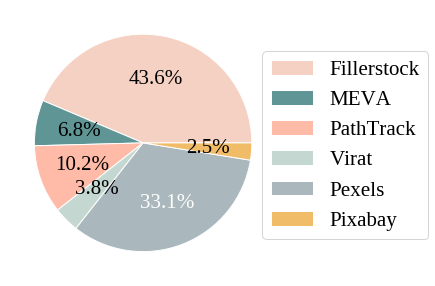

Video source composition: Videos are collected from sources where we are given the rights to redistribute the content and participants have given explicit consent.

Our dataset consists of 236 videos captured mostly from static-mounted cameras.

Approximately 80% of these videos were carefully sourced from scratch from stock footage websites, and 20% were collected from existing datasets.

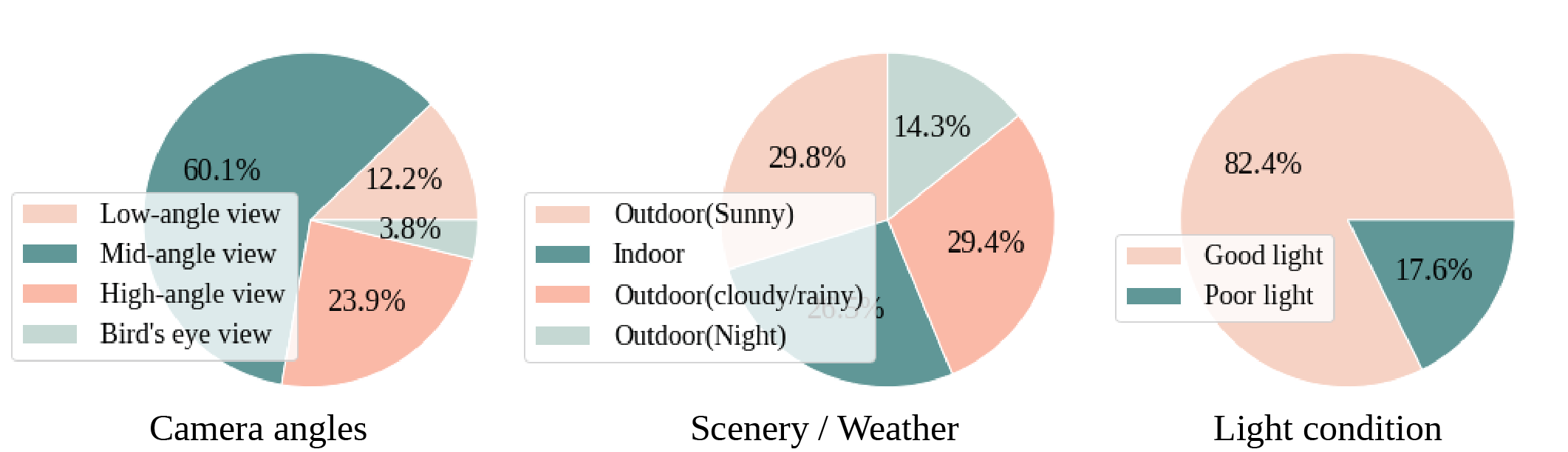

Video diversity: Videos in the dataset are highly diverse in terms of 1) camera angles (from bird's-eye view to low-angle view); 2) scenery and weather conditions (sunny, raining, cloudy, night); 3) lighting conditions; and 4) crowd densities.

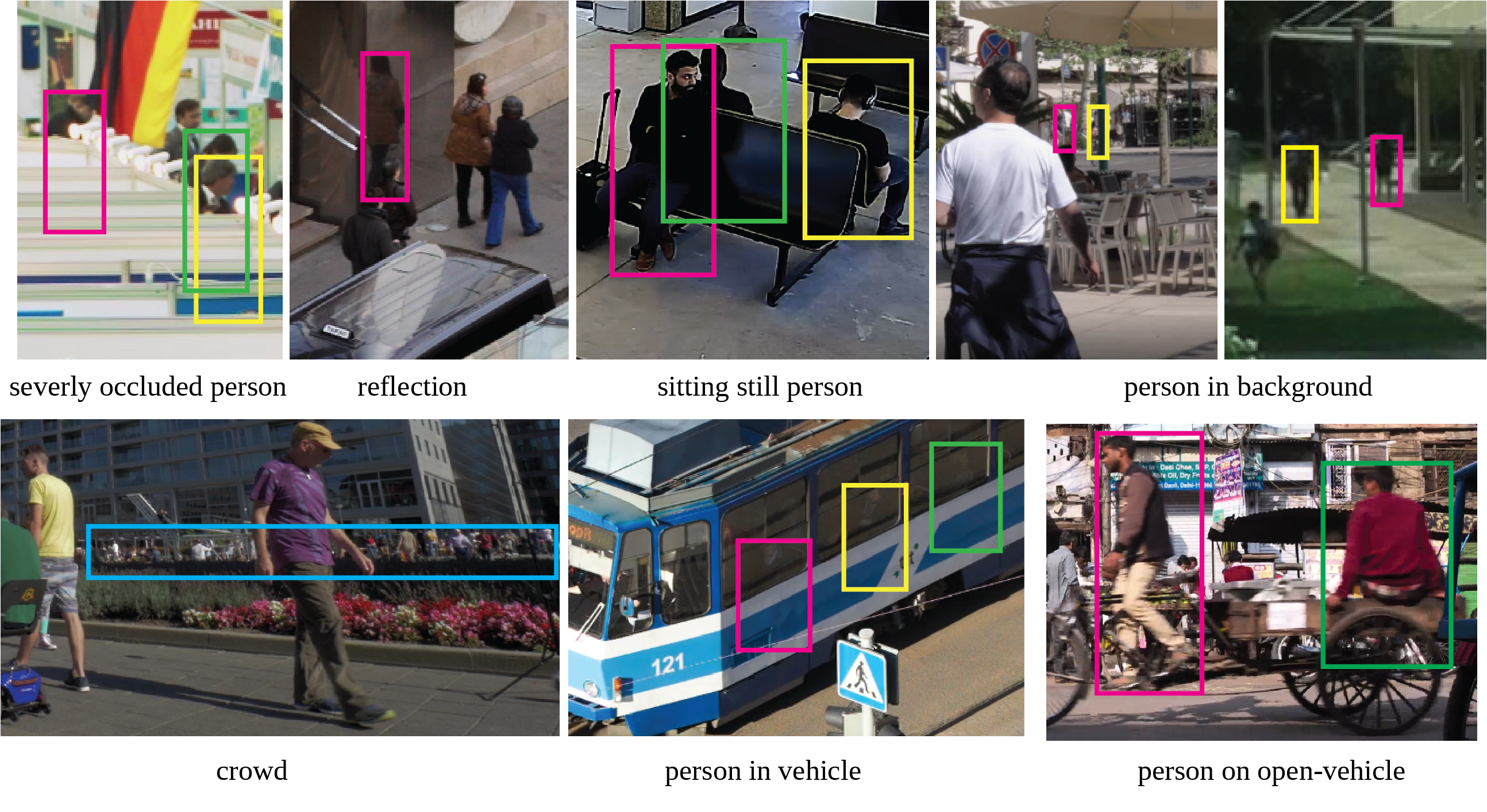

Person-level metadata: Videos in the PersonPath22 dataset are exhaustively annotated with both amodal and visible bounding boxes for each person, along with a unique identifier per person. In addition, each person is further labeled with the following tags:

- Sitting / Standing still person

- Person in vehicle

- Person on open vehicle

- Reflection

- Severely occluded person

- Person in background

- Foreground/Normal person
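
To make the person-level annotations described above concrete, here is a minimal Python sketch of how one annotated person in one frame might be represented in memory. This is only an illustration, not the dataset's actual file format: the names `PersonTag`, `PersonAnnotation`, `frame_index`, and `visible_fraction`, as well as the `(x, y, width, height)` box convention, are assumptions made for this example.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet, Tuple

# Assumed box convention for this sketch: (x, y, width, height) in pixels.
Box = Tuple[float, float, float, float]


class PersonTag(Enum):
    """Hypothetical names for the seven person-level tags listed above."""
    SITTING_OR_STANDING_STILL = "sitting_or_standing_still"
    IN_VEHICLE = "in_vehicle"
    ON_OPEN_VEHICLE = "on_open_vehicle"
    REFLECTION = "reflection"
    SEVERELY_OCCLUDED = "severely_occluded"
    IN_BACKGROUND = "in_background"
    FOREGROUND_NORMAL = "foreground_normal"


@dataclass(frozen=True)
class PersonAnnotation:
    """One person in one frame (hypothetical in-memory layout)."""
    track_id: int      # unique identifier of the person across frames
    frame_index: int   # assumed field: which frame this box belongs to
    amodal_box: Box    # full extent of the person, including occluded parts
    visible_box: Box   # extent of the visible part only
    tags: FrozenSet[PersonTag] = frozenset()


def box_area(box: Box) -> float:
    """Area of a box, treating negative width or height as zero."""
    _, _, width, height = box
    return max(width, 0.0) * max(height, 0.0)


def visible_fraction(annotation: PersonAnnotation) -> float:
    """Ratio of visible-box area to amodal-box area, clamped to [0, 1]."""
    amodal_area = box_area(annotation.amodal_box)
    if amodal_area == 0.0:
        return 0.0
    return min(box_area(annotation.visible_box) / amodal_area, 1.0)


# A person whose lower half is hidden, for example behind a parked car.
example = PersonAnnotation(
    track_id=7,
    frame_index=0,
    amodal_box=(100.0, 50.0, 40.0, 120.0),
    visible_box=(100.0, 50.0, 40.0, 60.0),
    tags=frozenset({PersonTag.SEVERELY_OCCLUDED}),
)
print(visible_fraction(example))
```

Keeping both boxes on the same record makes it easy to derive a rough visibility estimate, and storing the tags as a set lets a consumer filter out categories such as reflections or people in vehicles before evaluation. Whether such filtering is appropriate depends on the evaluation protocol, which this section does not specify.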